Abstract

The purpose of this study was to analyze the item structure of the General Knowledge Subtest of the Jouve-Cerebrals Crystallized Educational Scale (JCCES) using multidimensional scaling (MDS). The JCCES was developed as a more efficient assessment of cognitive abilities, in part by introducing a stopping rule based on consecutive errors. The MDS analyses revealed a horseshoe-shaped configuration of the General Knowledge items, indicating a continuum in which all constraints on the dissimilarities were supported. The two-dimensional scaling solution suggests that the items are well aligned with the construct being assessed. Limitations of the study, including the sample size and the assumptions underlying the MDS analyses, are discussed.

Keywords: Jouve-Cerebrals Crystallized Educational Scale, General Knowledge Subtest, multidimensional scaling, stopping rule, cognitive abilities, item structure

Introduction

Psychometric tests have been used for decades to assess cognitive abilities in various domains (Bors & Stokes, 1998; Deary, 2000). However, lengthy tests have been associated with several problems, including fatigue, boredom, and less accurate results (Sundre & Kitsantas, 2004). To address these problems, the Cerebrals Cognitive Ability Tests (CCAT) were revised, resulting in the Jouve-Cerebrals Crystallized Educational Scale (JCCES). One modification introduced in the JCCES was a stopping rule that ends testing after a set number of consecutive errors, a technique used in some Wechsler subtests and in the Reynolds Intellectual Assessment Scales (RIAS) (Reynolds & Kamphaus, 2003; Wechsler, 2008). The purpose of this study was to analyze the item structure of the JCCES General Knowledge Subtest, specifically by examining its two-dimensional multidimensional scaling (MDS) solution.
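A consecutive-error stopping rule can be expressed as a small scoring function. The sketch below is a hypothetical illustration, not the JCCES scoring procedure: the function name, the 1/0 coding of responses, and the assumption that items are presented in a fixed order (for example, from easiest to hardest) are illustrative choices. Applied retrospectively to complete response vectors, it shows the trade-off that any choice of criterion involves: a shorter criterion ends the test sooner but can forgo credit for later items the examinee would have answered correctly.

```python
from collections.abc import Sequence


def score_with_discontinue(responses: Sequence[int], max_consecutive_errors: int = 5) -> tuple[int, int]:
    """Score ordered dichotomous responses (1 = correct, 0 = error) under a
    hypothetical discontinue rule.

    Testing stops as soon as `max_consecutive_errors` errors occur in a row;
    items after that point are treated as not administered and earn no credit.
    Returns (raw_score, items_administered).
    """
    score = 0
    run_of_errors = 0
    administered = 0
    for response in responses:
        administered += 1
        if response == 1:
            score += 1
            run_of_errors = 0  # a correct answer resets the error run
        else:
            run_of_errors += 1
            if run_of_errors == max_consecutive_errors:
                break  # stopping criterion reached
    return score, administered


# Usage with a hypothetical examinee's complete response vector.
responses = [1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
print(score_with_discontinue(responses, 3))  # (2, 5)
print(score_with_discontinue(responses, 5))  # (3, 11)
```

With three consecutive errors the test ends after five items and misses the correct response on item 6; with five it is retained.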

Method

Rasch analysis is a well-established technique for estimating item difficulty parameters (Wright & Stone, 1979), and a stopping criterion based on consecutive errors is used in other cognitive ability tests, such as the Wechsler Adult Intelligence Scale (WAIS) and the Kaufman Assessment Battery for Children (K-ABC) (Kaufman & Kaufman, 1983; Wechsler, 2008). In the present study, the JCCES General Knowledge Subtest was administered to 588 participants, and a stopping criterion of five consecutive errors was implemented after a criterion of three consecutive errors was judged inappropriate. Items were rearranged according to their Rasch difficulty estimates, which allowed the item structure to be examined in a more systematic and objective manner. MDS analyses were then conducted to explore the underlying structure of the item response data.
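Ordering items by estimated difficulty is what makes a consecutive-error rule sensible, since examinees should meet the easier items first. The sketch below is a simplification rather than a full Rasch calibration (such as joint or conditional maximum likelihood): it uses the centered log-odds of failing each item, the customary starting value for iterative Rasch estimation. With a complete persons-by-items matrix, this yields the same item ordering as full Rasch estimates, because the item raw score is a sufficient statistic for item difficulty. The sketch assumes complete 0/1 data; responses made missing by a stopping rule would require the missing-data handling of dedicated Rasch software. The function names and data are hypothetical.

```python
import numpy as np


def approximate_item_difficulties(responses: np.ndarray) -> np.ndarray:
    """Centered log-odds of failure for each item.

    `responses` is a complete persons x items matrix coded 1 (correct) / 0 (error).
    Higher values mean harder items. This is a starting value for Rasch
    estimation, not a converged Rasch calibration.
    """
    p_correct = responses.mean(axis=0)
    if np.any((p_correct == 0) | (p_correct == 1)):
        raise ValueError("items passed by everyone or by no one have no finite logit")
    logits = np.log((1 - p_correct) / p_correct)
    return logits - logits.mean()  # center the item difficulties at 0 logits


def order_items_by_difficulty(item_ids, responses):
    """Return item IDs and difficulties sorted from easiest to hardest."""
    difficulties = approximate_item_difficulties(responses)
    order = np.argsort(difficulties, kind="stable")
    return [item_ids[i] for i in order], difficulties[order]


# Usage with a tiny hypothetical data set (4 examinees x 3 items).
data = np.array([[0, 1, 1],
                 [0, 1, 0],
                 [1, 1, 1],
                 [0, 0, 0]])
ids, diffs = order_items_by_difficulty(["c", "a", "b"], data)
print(ids, np.round(diffs, 2))
```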

Results

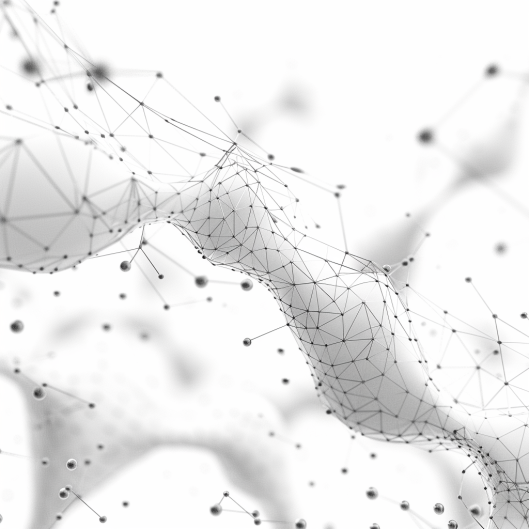

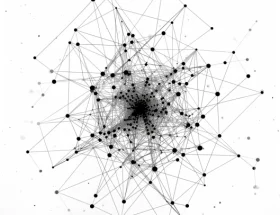

As shown in Figure 1, the MDS analyses produced a two-dimensional scaling solution for the General Knowledge Subtest with a Kruskal's Stress of .18 and a squared correlation (RSQ) of .87. The items formed a horseshoe-shaped pattern, indicating a continuum of difficulty levels in which the constraints on the dissimilarities were supported. This pattern is consistent with the concept of item difficulty in psychometric testing (Lord & Novick, 1968) and supports the validity of the test as a measure of cognitive abilities. These findings also suggest that a stopping rule based on consecutive errors is an effective way to improve the efficiency of the test.
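To make the reported fit indices concrete: assuming that "Kruskal's Stress" refers to Kruskal's Stress formula 1, as in common MDS output, and that RSQ is the squared correlation between the disparities and the fitted distances, the two indices are

$$\text{Stress-1} = \sqrt{\frac{\sum_{i<j}\left(d_{ij}-\hat{d}_{ij}\right)^{2}}{\sum_{i<j} d_{ij}^{2}}}, \qquad \text{RSQ} = r^{2}\!\left(\hat{d}, d\right),$$

where $d_{ij}$ is the distance between items $i$ and $j$ in the configuration and $\hat{d}_{ij}$ is the corresponding disparity. Lower Stress and higher RSQ indicate better fit. The Python sketch below computes both for a classical (metric) MDS solution. It is a simplification: the disparities are the input dissimilarities rescaled by a least-squares ratio, whereas nonmetric MDS derives them by monotone regression, so it is not expected to reproduce the values reported above. Function names are illustrative.

```python
import numpy as np


def classical_mds(dissim: np.ndarray, n_dims: int = 2) -> np.ndarray:
    """Torgerson (classical) MDS: double-center the squared dissimilarities
    and keep the eigenvectors with the largest eigenvalues."""
    n = dissim.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * centering @ (dissim ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(b)          # eigenvalues in ascending order
    idx = np.argsort(eigvals)[::-1][:n_dims]      # largest eigenvalues first
    return eigvecs[:, idx] * np.sqrt(np.clip(eigvals[idx], 0, None))


def pairwise_distances(coords: np.ndarray) -> np.ndarray:
    """Euclidean distances between all rows of `coords`."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def stress1_and_rsq(dissim: np.ndarray, coords: np.ndarray) -> tuple[float, float]:
    """Kruskal Stress-1 and RSQ, using ratio (metric) disparities."""
    iu = np.triu_indices(dissim.shape[0], k=1)    # each pair i < j once
    delta = dissim[iu]
    dist = pairwise_distances(coords)[iu]
    slope = (dist @ delta) / (delta @ delta)      # least-squares ratio fit
    disparities = slope * delta
    stress1 = np.sqrt(((dist - disparities) ** 2).sum() / (dist ** 2).sum())
    rsq = np.corrcoef(disparities, dist)[0, 1] ** 2
    return float(stress1), float(rsq)


# Usage: points that truly lie in a plane are recovered with (near) zero stress.
true_points = np.array([[0, 0], [3, 0], [0, 4], [3, 4], [1, 2]], dtype=float)
dissim = pairwise_distances(true_points)
stress, rsq = stress1_and_rsq(dissim, classical_mds(dissim))
print(f"Stress-1 = {stress:.3f}, RSQ = {rsq:.3f}")
```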

Figure 1. Multidimensional Scaling (MDS) of the General Knowledge Subtest items.

Note. N = 588.
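A horseshoe is often the MDS signature of a single ordered continuum, which links this result to Guttman's (1950) scalogram analysis and to the Guttman simplex (Collins & Cliff, 1990). The sketch below illustrates this with hypothetical data, not the JCCES responses. It builds a perfect Guttman pattern (one simulated examinee at each total score), measures item dissimilarity as the Euclidean distance between item response columns, and applies classical (Torgerson) MDS, which may differ from the scaling procedure used in the study. Under this pattern, the squared distance between two items equals their difference in difficulty rank, so distances grow only with the square root of that difference and the items cannot lie on a straight line. The two-dimensional solution therefore bends into a U: dimension 1 orders the items by difficulty, and dimension 2 places the easiest and hardest items on the same side, opposite the middle items.

```python
import numpy as np


def guttman_responses(n_items: int) -> np.ndarray:
    """Hypothetical perfect Guttman pattern: one examinee per total score
    0..n_items, each passing exactly the `score` easiest items (persons x items)."""
    scores = np.arange(n_items + 1)[:, None]
    items = np.arange(n_items)[None, :]
    return (items < scores).astype(float)


def item_distances(responses: np.ndarray) -> np.ndarray:
    """Euclidean distance between item columns: the square root of the number
    of examinees who pass one item and fail the other."""
    cols = responses.T
    diff = cols[:, None, :] - cols[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def classical_mds(dissim: np.ndarray, n_dims: int = 2) -> np.ndarray:
    """Torgerson (classical) MDS via double centering and eigendecomposition."""
    n = dissim.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * centering @ (dissim ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(b)
    idx = np.argsort(eigvals)[::-1][:n_dims]
    return eigvecs[:, idx] * np.sqrt(np.clip(eigvals[idx], 0, None))


coords = classical_mds(item_distances(guttman_responses(10)))
x, y = coords[:, 0], coords[:, 1]  # items are in order from easiest to hardest
print(np.round(coords, 2))
```

The signs of the dimensions are arbitrary in MDS, so the horseshoe may open upward or downward.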

Discussion

The results of this study suggest the benefits of implementing a stopping rule to improve the efficiency of cognitive ability tests. The horseshoe-shaped scaling pattern observed in the General Knowledge Subtest aligns well with the concept of item difficulty in psychometric testing. However, the limitations of this study should be acknowledged. The sample size of 588 is relatively small for this type of analysis, and caution is warranted when generalizing the findings to other populations (Hair et al., 1998). Additionally, the stopping criterion of five consecutive errors was chosen on the basis of the current sample and may not be optimal for all populations. Finally, methodological assumptions of the MDS analyses, such as linearity and homoscedasticity, may have influenced the results.

Conclusion

The JCCES provides a more efficient assessment of cognitive abilities, with the General Knowledge Subtest demonstrating a horseshoe-shaped scaling pattern indicative of a continuum of difficulty levels. The two-dimensional scaling solution indicates that the items are well-aligned with the construct being assessed. Although there are limitations to the study, these findings provide valuable insights into the item structure of the JCCES General Knowledge Subtest and support the use of a stopping rule based on consecutive errors to improve the efficiency of the test. Future research could explore the generalizability of the findings to larger and more diverse samples, as well as investigate the optimal stopping criterion for different populations.

References

Bors, D. A., & Stokes, T. L. (1998). Raven’s Advanced Progressive Matrices: Norms for first-year university students and the development of a short form. Educational and Psychological Measurement, 58(3), 382–398. https://doi.org/10.1177/0013164498058003002

Collins, L. M., & Cliff, N. (1990). Using the longitudinal Guttman simplex as a basis for measuring growth. Psychological Bulletin, 108(1), 128–134. https://doi.org/10.1037/0033-2909.108.1.128

Deary, I. J. (2000). Looking down on human intelligence: From psychometrics to the brain. Oxford, UK: Oxford University Press. https://doi.org/10.1093/acprof:oso/9780198524175.001.0001

Guttman, L. (1950). The basis for scalogram analysis. In S. A. Stouffer, L. Guttman, E. A. Suchman, P. F. Lazarsfeld, S. A. Star, & J. A. Clausen (Eds.), Measurement and prediction (pp. 60–90). Princeton, NJ: Princeton University Press.

Hair, J. F., Anderson, R. E., Tatham, R. L., & Black, W. C. (1998). Multivariate data analysis (5th ed.). Upper Saddle River, NJ: Prentice Hall.

Kaufman, A. S., & Kaufman, N. L. (1983). Kaufman Assessment Battery for Children. Circle Pines, MN: American Guidance Service.

Lord, F. M., & Novick, M. R. (1968). Statistical theories of mental test scores. Reading, MA: Addison-Wesley.

Reynolds, C. R., & Kamphaus, R. W. (2003). Reynolds Intellectual Assessment Scales (RIAS) and the Reynolds Intellectual Screening Test (RIST), Professional Manual. Lutz, FL: Psychological Assessment Resources.

Sundre, D. L., & Kitsantas, A. (2004). An exploration of the psychology of the examinee: Can examinee self-regulation and test-taking motivation predict consequential and non-consequential test performance? Contemporary Educational Psychology, 29(1), 6–26. https://doi.org/10.1016/S0361-476X(02)00063-2

Wechsler, D. (2008). Wechsler Adult Intelligence Scale–Fourth Edition (WAIS–IV). San Antonio, TX: Pearson. https://doi.org/10.1037/t15169-000

Wright, B. D., & Stone, M. H. (1979). Best test design: Rasch measurement. Chicago, IL: MESA Press.